Fresh Data In, Fast Decisions Out

Most rule engines failed to gain adoption because database integration was weak. In today's fragmented data landscape, getting data in and decisions out isn't optional. It's the foundation.

Walk into any modern organization and you’ll find data everywhere. Postgres tables, Snowflake warehouses, Salesforce records, Kafka streams, third-party APIs, and yes, spreadsheets that someone insists are critical. The boundaries are unclear. The sources multiply. The formats conflict.

At the core of what we’re building at Inferal is a rule engine. So what does this fragmented data landscape have to do with us?

Here’s the uncomfortable truth about rule engines: the hardest part isn’t the inference. It’s getting fresh data in and pushing decisions out fast. The engine is the easy part. The pipes are where projects die.

Why Rule Engines Stayed Niche

Rule engines have been around for decades. Drools, CLIPS, the business rules management systems of the 2000s and 2010s. They could do sophisticated inference: forward chaining, backward chaining, Rete networks, conflict resolution. Impressive technology.

Most of them never gained mainstream adoption.

The usual explanation is that rules are hard to write, hard to debug, and hard to maintain. That’s true, though AI and better tooling are rapidly changing this calculus. But there’s another reason that gets far less attention: they couldn’t get data in fast enough, or push decisions out reliably.

These engines existed in silos. You loaded data into them manually, or through brittle custom integrations. The workflow resembled a loading dock: data arrived in trucks (batch exports), was unloaded (parsed and transformed), processed (rules executed), and shipped back out (written to files or databases). The whole cycle could take hours.

This was somewhat forgivable. The data stack was in its infancy. ETL was the dominant paradigm. Nightly batch processing was acceptable because that’s how everything worked. Rule engines became specialized tools for domains where someone invested heavily in custom pipelines: fraud detection, insurance underwriting, loan origination. Everywhere else, they gathered dust.

ETL Doesn’t Cut It Anymore

The world changed. Real-time expectations are now baseline. AI agents need context immediately, not six hours from now. Decisions that could wait for a nightly batch in 2010 need to happen in milliseconds in 2026.

In “Understanding Agent-Native Data Systems,” I described how request-response architectures break down for agents. The same principle applies to rule engines. If your rule depends on inventory levels, and those levels were last updated at midnight, your rule is operating on stale reality. You’re making decisions about a world that no longer exists.

The paradigm shift is Change Data Capture (CDC). Don’t batch-export data on a schedule. Stream changes as they happen. When a row updates in Postgres, that change should flow to the rule engine within seconds, not hours.
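
A minimal sketch of the idea, in Python: each change event updates an in-memory copy of the table and the rule is re-evaluated immediately, instead of waiting for a nightly export. The `Change` shape and the `low_stock_rule` threshold are hypothetical; real CDC tools such as Debezium emit richer envelopes with before/after row images and source metadata.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]

@dataclass(frozen=True)
class Change:
    """Hypothetical, simplified CDC event."""
    op: str               # "insert", "update", or "delete"
    key: str              # primary key of the affected row
    row: Optional[Row]    # new row image; None for deletes

def apply_change(view: Dict[str, Row], change: Change) -> None:
    """Keep an in-memory copy of the table current, one change at a time."""
    if change.op == "delete":
        view.pop(change.key, None)
    elif change.op in ("insert", "update"):
        view[change.key] = dict(change.row or {})
    else:
        raise ValueError(f"unknown op {change.op!r}")

def low_stock_rule(view: Dict[str, Row], key: str, threshold: int = 10) -> bool:
    """Example rule: fire when a SKU's quantity drops below a threshold."""
    row = view.get(key)
    return row is not None and row["qty"] < threshold

def run(changes: List[Change]) -> Tuple[Dict[str, Row], List[str]]:
    view: Dict[str, Row] = {}
    fired: List[str] = []
    for change in changes:
        apply_change(view, change)
        # Evaluate against the freshest state after every change, not once per batch.
        if low_stock_rule(view, change.key):
            fired.append(change.key)
    return view, fired

view, fired = run([
    Change("insert", "sku-1", {"qty": 50}),
    Change("update", "sku-1", {"qty": 4}),   # the rule fires on this change
    Change("delete", "sku-1", None),
])
```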

The world moved from batch to streaming. Rule engines that didn’t follow became relics.

Getting Data In Is Harder Than It Looks

“We support Postgres” is a bullet point on a marketing slide. Making it work reliably at scale is engineering.

Consider Postgres logical replication. The database writes changes to the Write-Ahead Log (WAL), and consumers can subscribe to those changes through replication slots. Sounds simple. It isn’t.

Replication slots retain WAL segments until the consumer confirms it has processed them. If your rule engine gets slow, if there’s a network blip, if processing takes longer than expected, the pg_wal directory keeps growing. The database itself degrades, and if the disk fills, Postgres can shut down entirely. Production systems have lost significant write throughput because a downstream consumer was lagging.
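
One defensive measure is to watch how much WAL each slot is holding back and alert well before the disk fills. The sketch below assumes you periodically run the query in `RETAINED_WAL_SQL` and pass the rows to `slots_over_limit`; `pg_replication_slots`, `restart_lsn`, and `pg_current_wal_lsn()` are real Postgres names (the function exists since version 10), while the threshold and slot names are illustrative assumptions. Postgres can compute the difference server-side with `pg_wal_lsn_diff`; parsing it client-side here just keeps the example self-contained. Postgres 13 and later can also cap retention with `max_slot_wal_keep_size`, at the cost of invalidating a slot that falls too far behind.

```python
from typing import List, Optional, Tuple

# Run periodically against the primary.
RETAINED_WAL_SQL = """
SELECT slot_name, pg_current_wal_lsn()::text, restart_lsn::text
FROM pg_replication_slots;
"""

def parse_lsn(lsn: str) -> int:
    """Convert Postgres LSN text 'X/Y' (two hex halves) into a 64-bit byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)

def retained_bytes(current_lsn: str, restart_lsn: str) -> int:
    """Bytes of WAL the server must keep because this slot may still need them."""
    return parse_lsn(current_lsn) - parse_lsn(restart_lsn)

def slots_over_limit(rows: List[Tuple[str, str, Optional[str]]], limit_bytes: int) -> List[str]:
    """Return names of slots retaining more WAL than limit_bytes.

    restart_lsn is NULL for slots that have not reserved WAL, so skip those.
    """
    return [
        name
        for name, current, restart in rows
        if restart is not None and retained_bytes(current, restart) > limit_bytes
    ]

rows = [
    ("rules_engine", "1/00000000", "0/F0000000"),  # 256 MiB behind
    ("analytics", "1/00000000", "0/FFFFF000"),     # 4 KiB behind
]
lagging = slots_over_limit(rows, limit_bytes=64 * 1024 * 1024)
```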

Schema changes create coordination problems. You’re streaming changes from a table, and someone runs ALTER TABLE ADD COLUMN. The replication stream now has a new schema. Does your consumer handle this gracefully? Does it require a restart? Does it lose data during the transition?
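
A consumer can make additive changes like `ADD COLUMN` a non-event by decoding each record against the schema it knows: fill defaults for missing columns, and pass through (and report) columns it has never seen. This is a minimal sketch; `KNOWN_COLUMNS` and the flat column/value record shape are assumptions, and destructive changes such as dropped or retyped columns still need explicit handling.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical: the consumer's current picture of the table, with defaults.
KNOWN_COLUMNS: Dict[str, Any] = {"id": None, "sku": None, "qty": 0}

def decode_row(column_names: List[str], values: List[Any],
               known: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Map one change record onto the known schema without failing on drift.

    Known columns are copied through; known columns missing from the record get
    their default; columns never seen before (e.g. after ALTER TABLE ADD COLUMN)
    are kept and reported so the schema can be updated instead of dropping data.
    """
    if len(column_names) != len(values):
        raise ValueError("column and value counts differ")
    incoming = dict(zip(column_names, values))
    row = {col: incoming.get(col, default) for col, default in known.items()}
    new_columns = [col for col in column_names if col not in known]
    for col in new_columns:
        row[col] = incoming[col]
    return row, new_columns

row, new_cols = decode_row(["id", "sku", "qty", "warehouse"], [1, "A-1", 5, "berlin"], KNOWN_COLUMNS)
old_row, _ = decode_row(["id", "sku"], [2, "B-2"], KNOWN_COLUMNS)
```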

Edge cases multiply. Long-running transactions that span minutes. DDL statements that appear mid-stream. Initial snapshots that need to merge with real-time changes. Debezium and similar tools have spent years handling these cases, and they still have known limitations.
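
Merging an initial snapshot with the live stream is a good example of these edge cases. A common approach is to record the log position the snapshot is consistent with, then apply only stream changes after that position. The sketch assumes integer LSNs and a `(lsn, key, row)` change tuple, both simplifications of what a real replication protocol delivers.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

Row = Dict[str, Any]
StreamChange = Tuple[int, str, Optional[Row]]   # (lsn, key, row); row None means delete

def merge_snapshot_and_stream(snapshot: Dict[str, Row], snapshot_lsn: int,
                              stream: Iterable[StreamChange]) -> Dict[str, Row]:
    """Start from the snapshot, then replay only changes newer than it."""
    state = {key: dict(row) for key, row in snapshot.items()}
    for lsn, key, row in stream:
        if lsn <= snapshot_lsn:
            continue   # already reflected in the snapshot; skip to avoid applying it twice
        if row is None:
            state.pop(key, None)
        else:
            state[key] = dict(row)
    return state

state = merge_snapshot_and_stream(
    snapshot={"a": {"qty": 5}, "b": {"qty": 7}},
    snapshot_lsn=100,
    stream=[
        (90, "a", {"qty": 3}),    # older than the snapshot: skipped
        (110, "a", {"qty": 9}),
        (120, "b", None),         # delete
        (130, "c", {"qty": 1}),
    ],
)
```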

External APIs bring a different class of problems. Where CDC gives you a stream, APIs give you request-response: you pull, you don’t subscribe. That fundamental difference ripples through every layer of the integration.

Start with the obvious constraint: rate limits. You can’t just poll continuously, so you need backpressure handling, retry logic, and request prioritization. When APIs charge per call, budget tracking becomes a reliability concern because runaway loops generate invoices, not just CPU load. Then there’s pagination, which seems simple until you realize offset-based approaches drift when records change mid-traversal, while cursor-based ones require you to persist state across requests and handle cursor expiration gracefully.
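
A minimal sketch of two of these concerns together: a client-side token bucket that spaces requests out, and a cursor-paginated fetch loop that backs off exponentially (with jitter) when the API signals a rate limit. `fetch_page`, `RateLimited`, and the page shape are hypothetical stand-ins for a real API client; the clock and sleep functions are injected so the example runs instantly.

```python
import random
from typing import Any, Callable, List, Optional, Tuple

Page = Tuple[List[Any], Optional[str]]   # (items, next_cursor); next_cursor None on last page

class RateLimited(Exception):
    """Raised by a (hypothetical) fetch function when the API answers 429."""

class TokenBucket:
    """Client-side limiter: `rate` requests per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float]):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

def fetch_all(fetch_page: Callable[[Optional[str]], Page], bucket: TokenBucket,
              sleep: Callable[[float], None], max_retries: int = 5,
              base_delay: float = 0.5) -> List[Any]:
    """Walk every page; the cursor is the only state carried between requests."""
    items: List[Any] = []
    cursor: Optional[str] = None
    while True:
        for attempt in range(max_retries + 1):
            while not bucket.try_acquire():
                sleep(1 / bucket.rate)       # backpressure: wait for a token
            try:
                page, next_cursor = fetch_page(cursor)
                break
            except RateLimited:
                if attempt == max_retries:
                    raise
                # Exponential backoff with full jitter.
                sleep(random.uniform(0, base_delay * 2 ** attempt))
        items.extend(page)
        if next_cursor is None:
            return items
        cursor = next_cursor

# Usage against a fake API that rate-limits the second page once.
class FakeClock:
    def __init__(self) -> None:
        self.t = 0.0
    def now(self) -> float:
        return self.t
    def sleep(self, seconds: float) -> None:
        self.t += seconds

PAGES = {None: ([1, 2], "c1"), "c1": ([3, 4], "c2"), "c2": ([5], None)}
throttled = {"c1": 1}

def fake_fetch(cursor: Optional[str]) -> Page:
    if throttled.get(cursor, 0) > 0:
        throttled[cursor] -= 1
        raise RateLimited()
    return PAGES[cursor]

clock = FakeClock()
result = fetch_all(fake_fetch, TokenBucket(rate=2.0, capacity=1, clock=clock.now), clock.sleep)
```

In production the cursor would also be persisted after each page, so a restart resumes where it left off rather than starting over.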

Authentication adds its own lifecycle to manage. OAuth tokens expire, refresh flows can fail, and API keys get rotated. An integration that worked reliably for months breaks silently when a token expires overnight, and by the time anyone notices, hours of data are missing.
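
One mitigation is to refresh tokens proactively, a safety margin before they expire, and to fail loudly when refresh breaks instead of letting requests quietly return nothing. In this sketch `refresh` stands in for your provider’s OAuth refresh call (hypothetical), and the 60-second `skew` is an assumed default.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Token:
    value: str
    expires_at: float   # on the same clock the manager uses

class TokenManager:
    """Hands out a valid access token, refreshing `skew` seconds before expiry."""

    def __init__(self, refresh: Callable[[], Token],
                 clock: Callable[[], float] = time.monotonic, skew: float = 60.0):
        self.refresh = refresh          # hypothetical wrapper around the OAuth refresh flow
        self.clock = clock
        self.skew = skew
        self.token: Optional[Token] = None

    def get(self) -> str:
        if self.token is None or self.clock() >= self.token.expires_at - self.skew:
            # If refresh raises, let it propagate: a loud failure beats a silent gap.
            self.token = self.refresh()
        return self.token.value

# Usage with a fake clock and a fake refresh endpoint issuing 1-hour tokens.
now = [0.0]
issued: List[float] = []

def fake_refresh() -> Token:
    issued.append(now[0])
    return Token(value=f"tok-{len(issued)}", expires_at=now[0] + 3600)

manager = TokenManager(fake_refresh, clock=lambda: now[0])
first = manager.get()
now[0] = 3000.0
second = manager.get()   # still comfortably valid: reused
now[0] = 3550.0
third = manager.get()    # inside the 60 s window: refreshed
```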

The deeper challenge is incremental sync. CDC gives you a replay log of every change, in order. Most APIs don’t. You can poll for current state, but you can’t ask “what changed since yesterday?” unless the API explicitly supports it. Without that, you’re left comparing snapshots, detecting drift, and handling deletions you were never notified about. Webhooks help when available, but delivery is often best-effort: miss one, and it may be gone for good. Add network blips, API version changes, and third-party bugs, and every external dependency turns out to be a reliability problem in disguise.
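
When an API offers no “changed since” filter, the fallback is to diff consecutive full polls. This sketch assumes each poll returns records keyed by a stable id; the record fields are hypothetical.

```python
from typing import Any, Dict, List, Tuple

Snapshot = Dict[str, Dict[str, Any]]   # record id -> fields, from one full poll

def diff_snapshots(previous: Snapshot, current: Snapshot) -> Tuple[List[str], List[str], List[str]]:
    """Derive (inserted, updated, deleted) ids by comparing two consecutive polls.

    Deletions are inferred: an id present last time and absent now.
    """
    inserted = sorted(current.keys() - previous.keys())
    deleted = sorted(previous.keys() - current.keys())
    updated = sorted(k for k in current.keys() & previous.keys() if current[k] != previous[k])
    return inserted, updated, deleted

yesterday = {"1": {"stage": "lead"}, "2": {"stage": "won"}}
today = {"1": {"stage": "qualified"}, "3": {"stage": "lead"}}
inserted, updated, deleted = diff_snapshots(yesterday, today)
```

The catch is cost: every diff needs a full poll, which collides with the rate limits and per-call pricing mentioned earlier, and a record that changes and then changes back between polls is invisible.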

Decisions That Go Nowhere

Data flows in. Rules fire. Then what?

If the output sits in the rule engine’s internal state, it’s useless. A decision that doesn’t affect the world isn’t a decision. It’s a simulation.

Actions need to flow out: update a record, trigger a workflow, notify a system, activate an agent. Getting decisions out is as critical as getting data in, and it has its own failure modes.

Idempotency: what happens if the action fires twice? If your rule sends a notification, and the network times out before acknowledgment, does it retry? Does the user get two notifications? You need deduplication, or you need idempotent operations. Neither is free.
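
A common pattern is to give every decision a deterministic idempotency key and have the receiver remember which keys it has already processed, so a retry becomes a no-op. This sketch keeps processed keys in memory for illustration; a real receiver would need durable storage and would record the key and perform the action atomically. The decision fields are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List, Set

def idempotency_key(decision: Dict[str, Any]) -> str:
    """Deterministic key: the same decision always hashes to the same key."""
    canonical = json.dumps(decision, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

class NotificationReceiver:
    """Downstream side: performs each keyed action at most once."""

    def __init__(self) -> None:
        self.processed: Set[str] = set()   # durable storage in real use
        self.sent: List[Dict[str, Any]] = []

    def handle(self, key: str, decision: Dict[str, Any]) -> str:
        if key in self.processed:
            return "duplicate-ignored"
        self.sent.append(decision)          # the side effect, e.g. sending the notification
        self.processed.add(key)
        return "sent"

decision = {"rule": "low_stock", "sku": "sku-1", "event_id": "evt-1001"}
receiver = NotificationReceiver()
key = idempotency_key(decision)
first = receiver.handle(key, decision)
retry = receiver.handle(key, decision)      # the sender retried after a timeout
```

Deriving the key from something unique to the firing (here, a hypothetical triggering `event_id`) keeps two legitimately separate notifications from collapsing into one.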

Ordering: rules can fire in parallel, but some outputs need sequencing. If rule A credits an account and rule B then debits it, order matters. Process the debit first and the account goes negative.
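
One way to get ordering without serializing everything is to sequence per key: outputs for the same account are released strictly in order, while different accounts proceed in parallel. The sketch assumes an upstream component stamps each output with a per-key sequence number starting at 1; that numbering scheme is an assumption, not something a rule engine provides for free.

```python
from typing import Any, Dict, List

class PerKeySequencer:
    """Release outputs for each key (e.g. an account) strictly in sequence order.

    Early arrivals are buffered until the gap before them fills; different keys
    never wait on each other.
    """

    def __init__(self) -> None:
        self.next_seq: Dict[str, int] = {}
        self.pending: Dict[str, Dict[int, Any]] = {}

    def submit(self, key: str, seq: int, output: Any) -> List[Any]:
        expected = self.next_seq.setdefault(key, 1)
        if seq < expected:
            return []                       # already released: a duplicate
        buffer = self.pending.setdefault(key, {})
        buffer[seq] = output
        released = []
        while expected in buffer:
            released.append(buffer.pop(expected))
            expected += 1
        self.next_seq[key] = expected
        return released

sequencer = PerKeySequencer()
early = sequencer.submit("acct-42", 2, ("debit", 80))     # arrives first, must wait
ready = sequencer.submit("acct-42", 1, ("credit", 100))   # fills the gap, releases both

balance = 0
for kind, amount in ready:
    balance += amount if kind == "credit" else -amount
```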

Acknowledgment: how do you know the downstream system received the output? At-least-once delivery means possible duplicates. Exactly-once requires coordination protocols that add latency. Pick your tradeoff.
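
The root of the duplicate problem is that a lost acknowledgment looks exactly like a lost request. This sketch of an at-least-once sender retries until acknowledged and shows the consequence: the receiver sees the message twice. `AckLost` and `flaky_send` are hypothetical stand-ins for a transport timeout and a real endpoint. Pairing a sender like this with receiver-side deduplication is what makes the overall result effectively-once.

```python
from typing import Any, Callable, Dict, List

class AckLost(Exception):
    """The request may have arrived, but no acknowledgment came back (e.g. a timeout)."""

def deliver_at_least_once(send: Callable[[Dict[str, Any]], None],
                          message: Dict[str, Any], max_attempts: int = 3) -> int:
    """Retry until `send` returns normally (acknowledged); return the attempt count."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        try:
            send(message)
            return attempt
        except AckLost:
            if attempt >= max_attempts:
                raise

# Usage: the first delivery succeeds, but its acknowledgment is lost.
received: List[Dict[str, Any]] = []
drop_next_ack = [True]

def flaky_send(message: Dict[str, Any]) -> None:
    received.append(message)          # the receiver did get it...
    if drop_next_ack[0]:
        drop_next_ack[0] = False
        raise AckLost()               # ...but the sender never hears back

attempts = deliver_at_least_once(flaky_send, {"notify": "user-7"})
```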

The last mile problem: you’ve done sophisticated inference, correlated signals, identified a pattern worth acting on. The result gets lost because a webhook timed out. All that work, discarded.

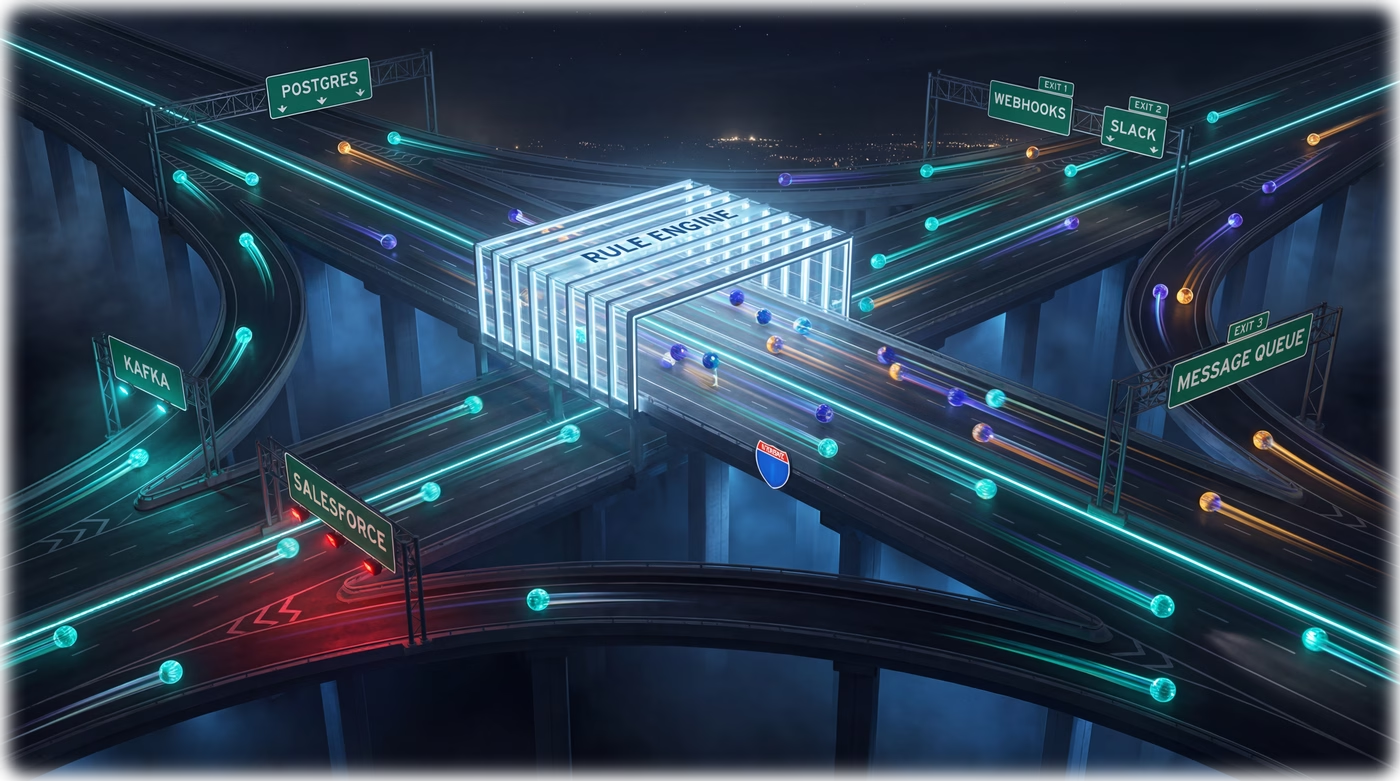

Ramps, Not Just Pipes

“Pipes” suggests passive flow. Water goes in one end, comes out the other. The pipe doesn’t care what’s in it.

Real integration is more like highway ramps. On-ramps and off-ramps. Active traffic engineering.

On-ramps are adapters that understand specific data sources. They know that Postgres sends changes in a certain format. They know that Salesforce has API quirks. They handle backpressure. They translate heterogeneous inputs into a common format the engine can process.
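
A minimal sketch of the adapter idea: each on-ramp knows one source’s quirks and emits the same `Fact` shape, so the engine never sees source-specific formats. Both input shapes here are assumptions for illustration (a simplified decoded Postgres change and a hypothetical CRM REST record), not the exact payloads of any real connector.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Protocol

@dataclass(frozen=True)
class Fact:
    """Hypothetical common format every on-ramp emits."""
    source: str
    entity: str
    key: str
    op: str                   # "upsert" or "delete"
    fields: Dict[str, Any]

class OnRamp(Protocol):
    def normalize(self, raw: Dict[str, Any]) -> Fact: ...

class PostgresRamp:
    """Assumed, simplified decoded-change shape:
    {"kind": "insert" | "update" | "delete", "table": str, "pk": Any, "columns": dict}
    """
    def normalize(self, raw: Dict[str, Any]) -> Fact:
        op = "delete" if raw["kind"] == "delete" else "upsert"
        return Fact("postgres", raw["table"], str(raw["pk"]), op, dict(raw.get("columns", {})))

class CrmApiRamp:
    """Assumed record shape from a hypothetical CRM REST API:
    {"Id": str, "type": str, "IsDeleted": bool, ...business fields}
    """
    META = ("Id", "type", "IsDeleted")

    def normalize(self, raw: Dict[str, Any]) -> Fact:
        fields = {k: v for k, v in raw.items() if k not in self.META}
        op = "delete" if raw.get("IsDeleted") else "upsert"
        return Fact("crm", raw["type"].lower(), raw["Id"], op, fields)

ramps: Dict[str, OnRamp] = {"postgres": PostgresRamp(), "crm": CrmApiRamp()}
facts: List[Fact] = [
    ramps["postgres"].normalize({"kind": "update", "table": "inventory", "pk": 17, "columns": {"qty": 4}}),
    ramps["crm"].normalize({"Id": "006A", "type": "Opportunity", "IsDeleted": False, "Amount": 5000}),
]
```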

Off-ramps are routers. They direct decisions to the right destinations. They handle failures: if the target is down, do we queue? Do we retry? Do we alert? They confirm delivery. They maintain ordering guarantees where needed.
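
A minimal off-ramp sketch: decisions are routed by a `type` field to destination callables; a failed delivery is queued for retry, and after `max_attempts` it lands in a dead-letter list and raises an alert rather than disappearing. The routes, the `DeliveryFailed` exception, and the in-memory queues are assumptions; a production router would persist its queue and space retries out with backoff.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, List, Tuple

Decision = Dict[str, Any]

class DeliveryFailed(Exception):
    """Raised by a destination when delivery did not succeed."""

class OffRamp:
    def __init__(self, routes: Dict[str, Callable[[Decision], None]], max_attempts: int = 3,
                 alert: Callable[[Decision, str], None] = lambda d, why: print(why, d)):
        self.routes = routes
        self.max_attempts = max_attempts
        self.alert = alert
        self.retry_queue: Deque[Tuple[Decision, int]] = deque()
        self.dead_letters: List[Decision] = []

    def dispatch(self, decision: Decision, attempt: int = 1) -> None:
        target = self.routes.get(decision["type"])
        if target is None:
            self.dead_letters.append(decision)
            self.alert(decision, "no route")
            return
        try:
            target(decision)
        except DeliveryFailed as exc:
            if attempt >= self.max_attempts:
                self.dead_letters.append(decision)   # keep it; never silently drop
                self.alert(decision, f"gave up after {attempt} attempts: {exc}")
            else:
                self.retry_queue.append((decision, attempt + 1))

    def drain_retries(self) -> None:
        """Retry everything queued so far; call periodically, e.g. from a timer."""
        for _ in range(len(self.retry_queue)):
            decision, attempt = self.retry_queue.popleft()
            self.dispatch(decision, attempt)

delivered: List[Decision] = []
alerts: List[str] = []
webhook_failures = [1]   # the webhook times out once, then recovers

def webhook(d: Decision) -> None:
    if webhook_failures[0]:
        webhook_failures[0] -= 1
        raise DeliveryFailed("timeout")
    delivered.append(d)

def crm_down(d: Decision) -> None:
    raise DeliveryFailed("503")

ramp = OffRamp({"notify": webhook, "crm_update": crm_down}, max_attempts=2,
               alert=lambda d, why: alerts.append(why))
ramp.dispatch({"type": "notify", "user": 7})
ramp.dispatch({"type": "crm_update", "id": 9})
ramp.drain_retries()
```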

The rule engine itself is the highway: fast parallel processing, pattern matching, condition evaluation. But without well-designed ramps, traffic backs up at the entrance or gets lost at the exit.

Integration isn’t plumbing. It’s traffic engineering.

What This Means for Rule Engines

A rule engine that treats integration as someone else’s problem will remain niche. The enterprise will build custom pipelines around it, adding months to every deployment. Startups will choose simpler solutions that actually connect to their data.

The successful rule engine of 2026 needs:

- First-class CDC support: not “you can connect to Kafka” but “we handle Postgres replication slots, schema evolution, and initial snapshots.”

- Source-specific adapters: built-in handling for common edge cases, not just generic connectors.

- Robust egress: delivery guarantees, idempotency, ordering where needed.

- Observability: where is data stuck? What’s lagging? What failed? The integration layer needs monitoring as much as the inference layer.
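
One way to answer “where is data stuck?” is to have each stage publish a watermark and compare them: the stage that adds the most lag is the bottleneck. This sketch assumes every stage reports the source timestamp of the newest event it has fully processed, all on one clock; the stage names and the SLO value are hypothetical.

```python
from typing import List, Optional, Tuple

def lag_report(pipeline: List[Tuple[str, float]], now: float) -> List[Tuple[str, float, float]]:
    """Return (stage, end-to-end lag, lag added by this stage) for each stage.

    `pipeline` lists stages in flow order, each with its watermark: the source
    timestamp of the newest event that stage has fully processed.
    """
    report = []
    previous_lag = 0.0
    for name, watermark in pipeline:
        lag = now - watermark
        report.append((name, lag, lag - previous_lag))
        previous_lag = lag
    return report

def where_is_data_stuck(pipeline: List[Tuple[str, float]], now: float,
                        slo_seconds: float) -> Optional[str]:
    """If end-to-end lag breaks the SLO, name the stage that added the most lag."""
    report = lag_report(pipeline, now)
    if not report or report[-1][1] <= slo_seconds:
        return None
    return max(report, key=lambda r: r[2])[0]

pipeline = [("cdc_ingest", 998.0), ("rule_eval", 997.5), ("egress", 940.0)]
stuck = where_is_data_stuck(pipeline, now=1000.0, slo_seconds=5.0)
```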

This is what we’re building at Inferal. Not just inference, but the complete data flow. The same principle from agent-native architecture applies: agents need context delivered, not fetched. Rules need data pushed, not pulled. The integration layer isn’t a feature. It’s the foundation.

In a world of fragmented data, the bottleneck isn’t the rule logic. The bottleneck is getting the right data to the right place at the right time. Fresh data in, fast decisions out. Build the ramps.